Gradient Boosting Decision Tree (GBDT) is a popular machine learning algorithm. Neural networks and genetic algorithms are our naive approach to imitating nature.

The Ultimate Guide To Adaboost Random Forests And Xgboost By Julia Nikulski Towards Data Science

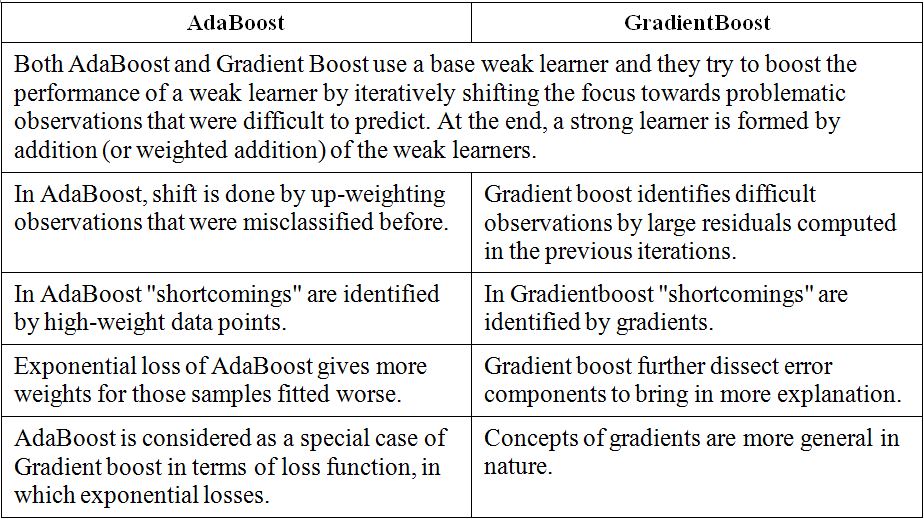

Gradient Boosting was developed as a generalization of AdaBoost, by observing that what AdaBoost was doing was a gradient search in decision tree space against a particular loss function.

XGBoost delivers high performance compared to Gradient Boosting. XGBoost is a more regularized form of Gradient Boosting. It worked, but wasn't that efficient.

AdaBoost, Gradient Boosting and XGBoost. XGBoost is a boosting algorithm that is used in competitions such as Kaggle to improve model accuracy and robustness. XGBoost is an implementation of GBM; in a GBM you can configure which base learner is used.

It has quite effective implementations such as XGBoost, and many optimization techniques are adopted from this algorithm. Difference between gradient boosting and AdaBoost: AdaBoost and gradient boosting are both ensemble techniques applied in machine learning to enhance the efficacy of weak learners.

Before understanding XGBoost, we first need to understand trees, especially the decision tree. This study demonstrates the efficacy of using eXtreme Gradient Boosting (XGBoost) as a state-of-the-art machine learning (ML) model to forecast ...

It is based on gradient boosted decision trees. Gradient boosted trees consider the special case where the simple model h is a decision tree. Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting.

I think the Wikipedia article on gradient boosting explains the connection to gradient descent really well. While regular gradient boosting uses the loss function of our base model, e.g. ... Mathematical differences between GBM and XGBoost: first, I suggest you read the paper by Friedman about the Gradient Boosting Machine, applied to linear regression models, classifiers, and decision trees in particular.

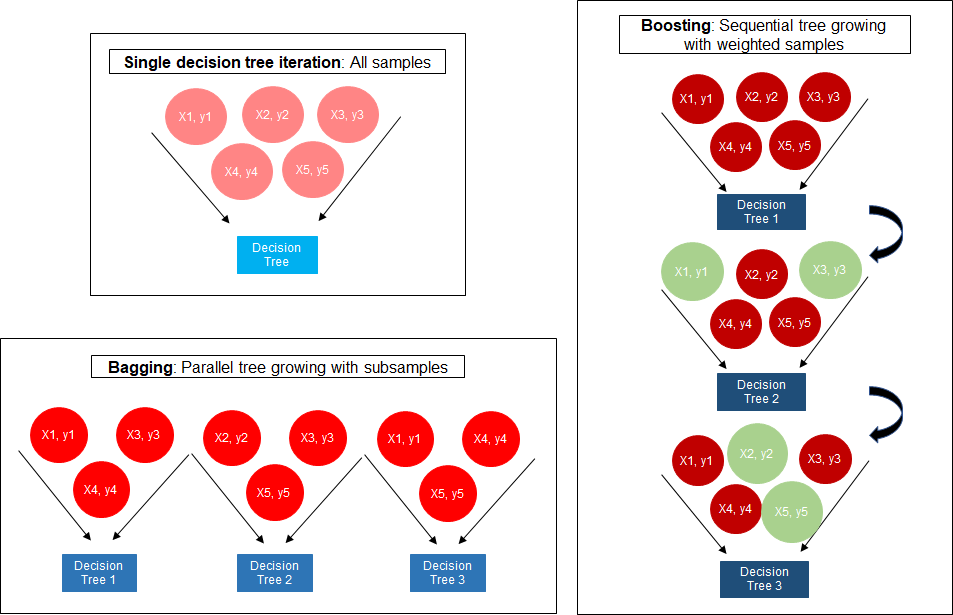

Gradient boosting is a technique for building an ensemble of weak models such that the predictions of the ensemble minimize a loss function. I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational efficiency by parallelizing tree construction, which one can see in this blog. XGBoost stands for Extreme Gradient Boosting.
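To make the idea of an ensemble of weak models that minimizes a loss function more concrete, here is a minimal sketch in plain Python. It assumes a single numeric feature, squared-error loss, and decision stumps as the weak model; the function names (`fit_stump`, `gradient_boost`, `mse`) and the toy data are made up for illustration and are not taken from any library.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression stump on a 1-D feature by minimizing squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # every value except the largest is a candidate split
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lmean = sum(left) / len(left)
        rmean = sum(right) / len(right)
        sse = (sum((r - lmean) ** 2 for r in left)
               + sum((r - rmean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, lmean, rmean)
    _, t, lmean, rmean = best
    return lambda x: lmean if x <= t else rmean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Boost stumps on squared-error loss; returns a prediction function."""
    base = sum(ys) / len(ys)          # start from the constant model
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For the loss 1/2 * (y - p)^2 the negative gradient is the residual y - p.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    return predict


# Toy data (hypothetical): a step-like relationship between x and y.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [1.2, 1.9, 3.2, 3.8, 8.1, 8.9, 10.2, 10.8]


def mse(predict):
    """Mean squared error of a prediction function on the toy data."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


constant_model = gradient_boost(xs, ys, n_rounds=0)
short_model = gradient_boost(xs, ys, n_rounds=5)
boosted_model = gradient_boost(xs, ys, n_rounds=50)
```

Comparing `mse(constant_model)`, `mse(short_model)` and `mse(boosted_model)` shows the training error shrinking as more stumps are added. Real implementations add subsampling, deeper trees, and other losses on top of this loop.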

XGBoost computes second-order gradients, i.e. second partial derivatives of the loss function. Gradient boosting only focuses on the variance but not on the bias-variance trade-off, whereas XGBoost can also focus on the regularization factor. This diagram is taken from XGBoost's documentation.
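To spell out what "second-order gradients" means, here is a sketch in the notation commonly used in Chen and Guestrin's XGBoost paper. The symbols $l$, $\hat{y}_i$, $f_t$, $g_i$, $h_i$ and $\Omega$ do not appear in the text above and are assumed here. At boosting round $t$, the objective is approximated by a second-order Taylor expansion around the previous prediction:

$$
\mathcal{L}^{(t)} \approx \sum_{i=1}^{n} \left[ l\big(y_i, \hat{y}_i^{(t-1)}\big) + g_i\, f_t(x_i) + \tfrac{1}{2}\, h_i\, f_t(x_i)^2 \right] + \Omega(f_t),
\qquad
g_i = \frac{\partial\, l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial\, \hat{y}_i^{(t-1)}},
\quad
h_i = \frac{\partial^2 l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial \big(\hat{y}_i^{(t-1)}\big)^2}.
$$

For squared error, $l = \tfrac{1}{2}(y_i - \hat{y}_i)^2$, this gives $g_i = \hat{y}_i - y_i$ and $h_i = 1$. For logistic loss with $p_i = \sigma(\hat{y}_i)$, it gives $g_i = p_i - y_i$ and $h_i = p_i(1 - p_i)$. Plain gradient boosting uses only $g_i$; the $h_i$ term is what makes the update a Newton-style step.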

GBM is an algorithm, and you can find the details in "Greedy Function Approximation: A Gradient Boosting Machine". The different types of boosting algorithms are ... The base algorithm is the Gradient Boosting Decision Tree algorithm.

What are the fundamental differences between XGBoost and the gradient boosting classifier from scikit-learn? XGBoost stands for Extreme Gradient Boosting, and was proposed by researchers at the University of Washington. XGBoost was developed to increase speed and performance while introducing regularization parameters to reduce overfitting.

AdaBoost, Gradient Boosting and XGBoost are three algorithms that do not get much recognition. XGBoost is an improved version of the gradient boosting algorithm. Boosting is a method of converting a set of weak learners into a strong learner.
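As an illustration of turning weak learners into a strong one, below is a minimal AdaBoost sketch in plain Python. It assumes a single numeric feature, labels in {-1, +1}, and threshold stumps as the weak learner; the helper names (`best_stump`, `adaboost`) and the toy data are hypothetical and chosen so that no single stump can separate the classes.

```python
import math


def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best threshold stump.

    A stump predicts `polarity` when x <= threshold and `-polarity` otherwise.
    """
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x <= t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost(xs, ys, n_rounds=10):
    """Train AdaBoost with stumps; returns a function mapping x to -1 or +1."""
    n = len(xs)
    weights = [1.0 / n] * n           # start with uniform example weights
    ensemble = []                     # (alpha, threshold, polarity) per round
    for _ in range(n_rounds):
        err, t, pol = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)   # guard against log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # vote strength of this stump
        ensemble.append((alpha, t, pol))
        # Misclassified examples gain weight, correctly classified ones lose weight.
        weights = [w * math.exp(-alpha * y * (pol if x <= t else -pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]

    def predict(x):
        score = sum(a * (p if x <= t else -p) for a, t, p in ensemble)
        return 1 if score >= 0 else -1

    return predict


# Toy data (hypothetical): the label flips three times along the x axis.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

single = adaboost(xs, ys, n_rounds=1)
boosted = adaboost(xs, ys, n_rounds=3)
single_errors = sum(single(x) != y for x, y in zip(xs, ys))
boosted_errors = sum(boosted(x) != y for x, y in zip(xs, ys))
```

On this toy set a single stump misclassifies some points, while the weighted vote of three stumps fits all of them. The reweighting step is what forces each new stump to concentrate on the examples the earlier ones got wrong.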

XGBoost (eXtreme Gradient Boosting) is a relatively new algorithm that was introduced by Chen and Guestrin in 2016 and uses the concept of gradient tree boosting. AdaBoost (Adaptive Boosting): AdaBoost works on improving the ...

I have several questions below. XGBoost is a very popular and in-demand algorithm, often referred to as the winning algorithm for various competitions on different platforms. I learned that XGBoost uses Newton's method to optimize the loss function, but I don't understand what will happen in the case that the Hessian is not positive definite.

Boosting algorithms are iterative functional gradient descent algorithms. The base learner can be a tree, a stump, or another model, even a linear model. XGBoost, for example, can use a linear model as its base learner.
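A hedged sketch of what using a linear base learner in XGBoost can look like is shown below. It assumes the `xgboost` Python package is installed; `booster="gblinear"`, `xgb.DMatrix` and `xgb.train` are part of that package, while the dictionary name `LINEAR_PARAMS`, the function `train_linear_booster`, and the chosen parameter values are made up for this example.

```python
# Parameters selecting XGBoost's linear booster instead of the default
# tree booster ("gbtree"). The values are illustrative, not tuned.
LINEAR_PARAMS = {
    "booster": "gblinear",            # linear model as the base learner
    "objective": "reg:squarederror",  # squared-error regression
    "lambda": 1.0,                    # L2 penalty on the weights
    "alpha": 0.0,                     # L1 penalty on the weights
}


def train_linear_booster(X, y, num_rounds=50):
    """Train a booster with a linear base learner.

    X is a 2-D array-like of features and y a 1-D array-like of targets.
    The import is done lazily so the parameters above can be inspected
    even when xgboost is not installed.
    """
    import xgboost as xgb

    dtrain = xgb.DMatrix(X, label=y)
    return xgb.train(LINEAR_PARAMS, dtrain, num_boost_round=num_rounds)
```

Calling `train_linear_booster(X, y)` returns a trained booster whose `predict` method takes another `DMatrix`. Switching `"booster"` back to `"gbtree"` gives the usual tree-based model, which is the main design choice this parameter controls.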

They work well for a class of problems but they do ... Decision-tree-based algorithms are considered best for small-to-medium structured or tabular data. XGBoost is a library written in C++ which optimizes the training for Gradient Boosting.

AdaBoost is the original boosting algorithm, developed by Freund and Schapire. XGBoost's training is very fast and can be parallelized or distributed across clusters.

XGBoost uses advanced regularization (L1 and L2), which improves the model's ability to generalize. XGBoost (Extreme Gradient Boosting) is a gradient boosting library available in Python. The idea behind boosting is to train predictors successively, where every subsequent model tries to fix the flaws of its predecessor.
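To show how the L1 and L2 penalties mentioned above can shrink what a tree predicts, here is a small sketch in plain Python. It assumes the usual second-order formulation in which a leaf's value is computed from the summed gradients G and summed Hessians H of the examples in that leaf, with the L1 term applied as a soft threshold on G. The function names are hypothetical; `reg_lambda` and `reg_alpha` are named after the corresponding XGBoost parameters, but this is not XGBoost's actual code.

```python
def soft_threshold(g, alpha):
    """Shrink g toward zero by alpha (the effect of an L1 penalty)."""
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0


def leaf_weight(grads, hessians, reg_lambda=1.0, reg_alpha=0.0):
    """Regularized leaf value: -soft_threshold(G, alpha) / (H + lambda)."""
    G, H = sum(grads), sum(hessians)
    return -soft_threshold(G, reg_alpha) / (H + reg_lambda)


# Three examples in one leaf, squared-error loss, current prediction 0:
# gradient = prediction - target, Hessian = 1 for every example.
targets = [3.0, 5.0, 4.0]
grads = [0.0 - y for y in targets]
hessians = [1.0] * len(targets)

unregularized = leaf_weight(grads, hessians, reg_lambda=0.0, reg_alpha=0.0)
with_l2 = leaf_weight(grads, hessians, reg_lambda=1.0, reg_alpha=0.0)
with_l1_and_l2 = leaf_weight(grads, hessians, reg_lambda=1.0, reg_alpha=2.0)
```

Without regularization the leaf value is just the mean target (4.0 here); adding the L2 term pulls it to 3.0, and adding the L1 term on top pulls it further to 2.5. Smaller leaf values mean each tree makes a more cautious correction, which is how the penalties help against overfitting.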

However, the efficiency and scalability are still unsatisfactory when there are more features in the data. In this case there are going to be ...

Catboost Vs Light Gbm Vs Xgboost By Alvira Swalin Towards Data Science

Comparison Between Adaboosting Versus Gradient Boosting Statistics For Machine Learning

Xgboost Algorithm Long May She Reign By Vishal Morde Towards Data Science

A Comparitive Study Between Adaboost And Gradient Boost Ml Algorithm

Xgboost Versus Random Forest This Article Explores The Superiority By Aman Gupta Geek Culture Medium

Exploring Xg Boost Extreme Gradient Boosting From The Genesis

The Intuition Behind Gradient Boosting Xgboost By Bobby Tan Liang Wei Towards Data Science

Gradient Boosting And Xgboost Note This Post Was Originally By Gabriel Tseng Medium
